> This section showcases the projects I've built or worked on over the years.

Portfolio Contents

- Data Consultant - What are we talking about?

- Startup & Entrepreneurship - Why and what is the purpose

- Trading - Opportunities and my strategy

- Datahub - A modern Data Integration Architecture for complex systems

- P2P - A legacy Data Integration Architecture for simple systems

- JIRA Automation - REST API scenarios stored in a database

Data Consultant

"Data Consultant" is, as expected, a generic term.

The reason is the variety of missions I take on for my clients. Depending on the mission, I change the "hat" I wear, so my skillset needs to be more "general" and less "specialized".

Some examples:

- Audit of a current platform

- Architecting a new system

- Migration of a legacy system to a new one

- Training the team

- Designing a new data platform

- Supporting the team

- Maintenance of a current platform in production

- Documentation

- Proof-of-concept

- Adding monitoring and observability

- Optimising a database, a data pipeline, a data platform

- Doing code reviews and being in charge of the Git repositories

- Creating best practices and development standards

- Leading the team's adoption of best practices

- Driving the team in a technical way

- And many more...

However, for the past 6 years, my main title has been "Technical Lead", where I'm responsible for the team's technical choices and act as the link between the business and the engineers.

It is a role that requires a balance between the technical side and the business side. On average, I spend 30% of my time with the client, 50% with the team, and the remaining 20% for myself.

Startup & Entrepreneurship

I've been following the startup scene for many years in the USA, Europe and Asia. Seeing how startups using AI and data have recently achieved great success is truly motivating.

The amount of innovation, speed and success is impressive. Based on those results, the possibility of building with AI agents, and a strong interest in creating my own software, I've decided to start my own adventure: a Data Platform focused on Observability.

Among all the ideas around the web, data is the evergreen topic in the IT field.

Any business selling online and any company running applications (CRM, ERP, PIM, HRM, PMS, e-commerce platform, etc.) is concerned with data. The field is vast; here are some examples:

- Supporting AI by providing quality and integration

- Supporting the development of the business by analyzing data downtime

- Enhancing data quality

- Enabling data synchronization

- Enhancing the supply chain by analyzing which part is weaker or needs to be improved

- And many more...

The field is so vast, from supporting AI by providing quality and integration, to supporting the development of the business by analyzing data downtime, data quality, data consistency, or enhancing the supply chain by analyzing which part is weaker or needs to be improved.

The possibilities are infinite.

In the end, all that matters is making sure companies use their data to stay ahead of their competitors, satisfy their customers, and gain insights into their business, based on logical and tangible results.

It's not just about the freedom and excitement of building things, but also about being part of the innovation and bringing new ideas to businesses.

Trading

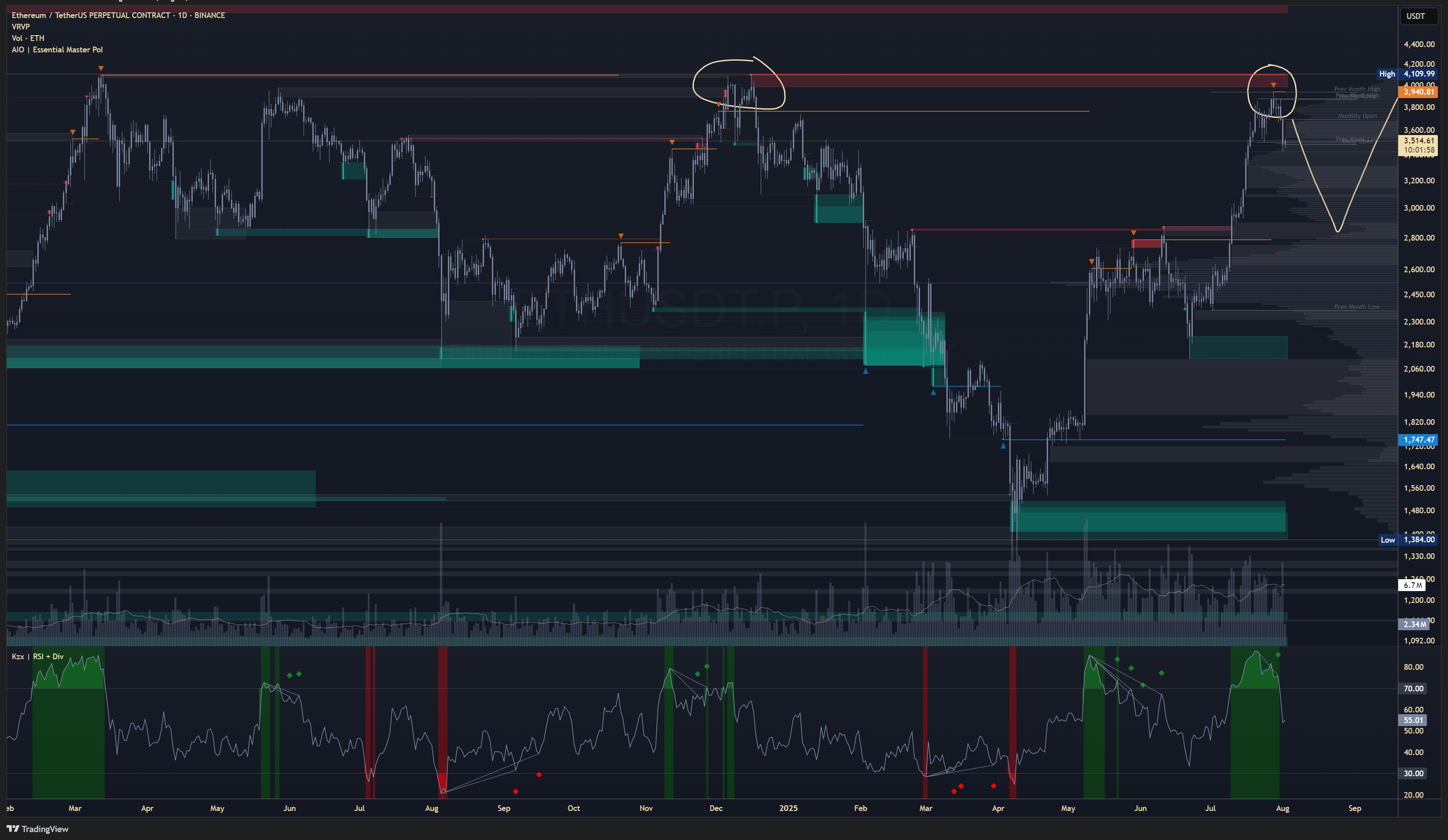

When the market offers good opportunities, I trade on the crypto market. I use my own trading indicators, built on TradingView.

My strategy is based on high-timeframe reversals (Daily or Weekly) to short the market. When a long uptrend starts to show signs of weakness, I start building a short position.

I use a combination of technical analysis and psychological analysis to make my decisions.

The main indicators I use are:

- VRVP

- Volume

- RSI + Divergence

- VWAP (Monthly or Weekly timeframe)

- A custom indicator combining order blocks and liquidity, Bollinger Bands, FVG (fair value gaps), exhaustion levels and timeframe levels

Here's what it looks like:

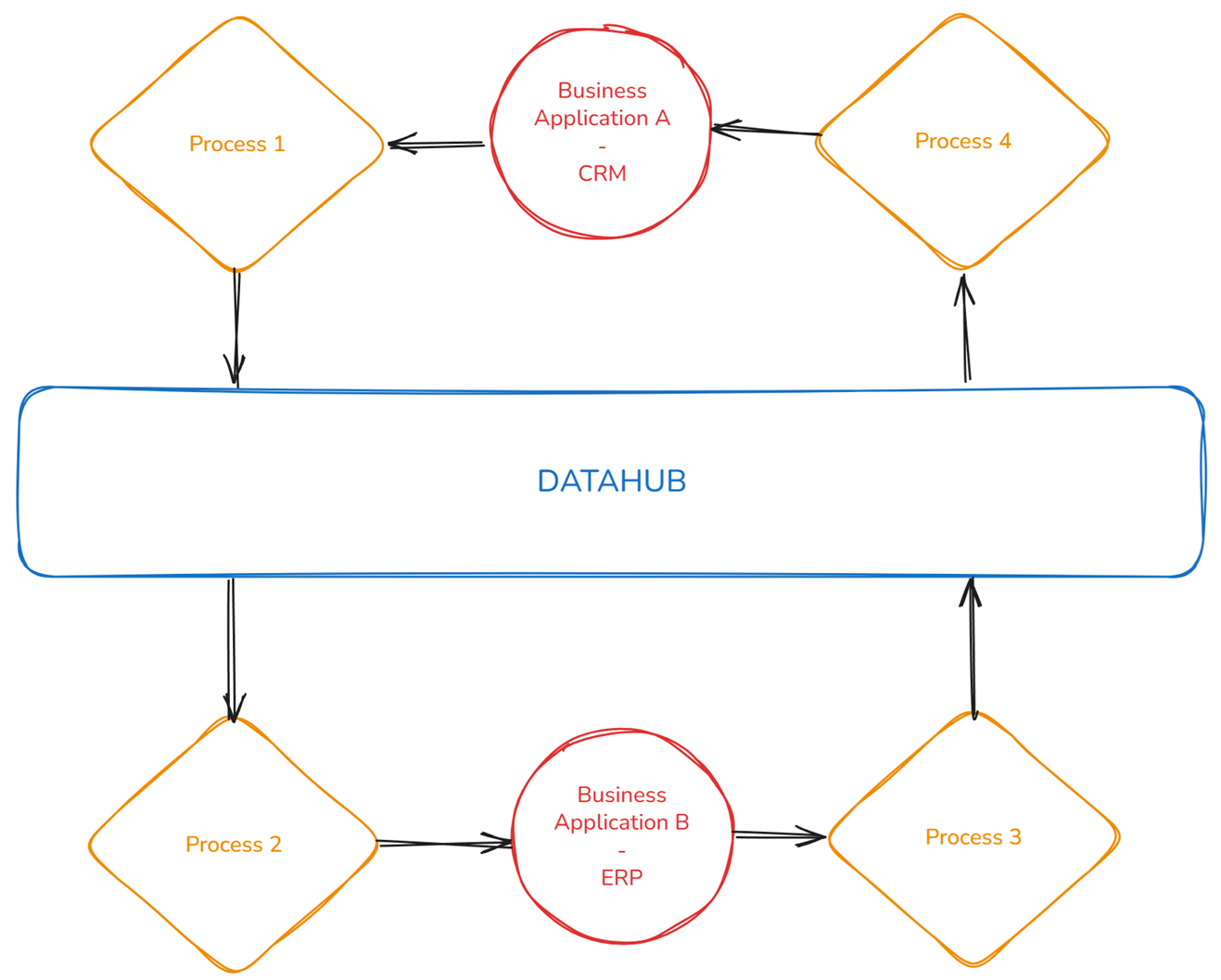
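To make the "RSI" entry in the list above more concrete, here is a minimal Python sketch of the standard RSI calculation with Wilder's smoothing. It is not the TradingView indicator shown in the screenshot: the function names, the default period of 14 and the sample prices are assumptions chosen for illustration.

```python
from typing import List, Optional


def _to_rsi(avg_gain: float, avg_loss: float) -> float:
    # With no losses in the window, RSI is conventionally pinned at 100.
    if avg_loss == 0:
        return 100.0
    rs = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + rs)


def rsi(closes: List[float], period: int = 14) -> List[Optional[float]]:
    """RSI per closing price; the first `period` entries are None (not enough data)."""
    if len(closes) <= period:
        raise ValueError("need more than `period` closing prices")

    # Split each price change into a gain part and a loss part.
    changes = [b - a for a, b in zip(closes, closes[1:])]
    gains = [max(c, 0.0) for c in changes]
    losses = [max(-c, 0.0) for c in changes]

    # Seed with a simple average over the first window.
    avg_gain = sum(gains[:period]) / period
    avg_loss = sum(losses[:period]) / period
    values: List[Optional[float]] = [None] * period
    values.append(_to_rsi(avg_gain, avg_loss))

    # Wilder's smoothing for every following bar.
    for gain, loss in zip(gains[period:], losses[period:]):
        avg_gain = (avg_gain * (period - 1) + gain) / period
        avg_loss = (avg_loss * (period - 1) + loss) / period
        values.append(_to_rsi(avg_gain, avg_loss))
    return values


# Made-up daily closes, only to show the call.
sample_closes = [100, 102, 101, 105, 107, 106, 110, 111, 109, 113,
                 115, 114, 118, 120, 119, 117, 116, 118, 115, 112]
latest_rsi = rsi([float(c) for c in sample_closes])[-1]
```

A bearish divergence check would then compare successive price highs against the RSI values at those highs; that part is left out here because it depends on how swing highs are defined.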

Datahub Architecture

This diagram shows my vision of a "datahub". Anyone working in the data field with dataflows will have their own definition of what a datahub is.

Mine describes the "core" functionalities: one place where all the data goes from source to target and is processed, enriched, synchronized, stored and shown along the way.

The applications are connected to the datahub. The hub is the messenger from the source to the target applications.

The benefit of this architecture is a reduced number of applications to maintain, along with better security, data quality and data consistency.

The drawback is the complexity of the architecture. Each integration of a new application needs to follow the exact same process and architecture.

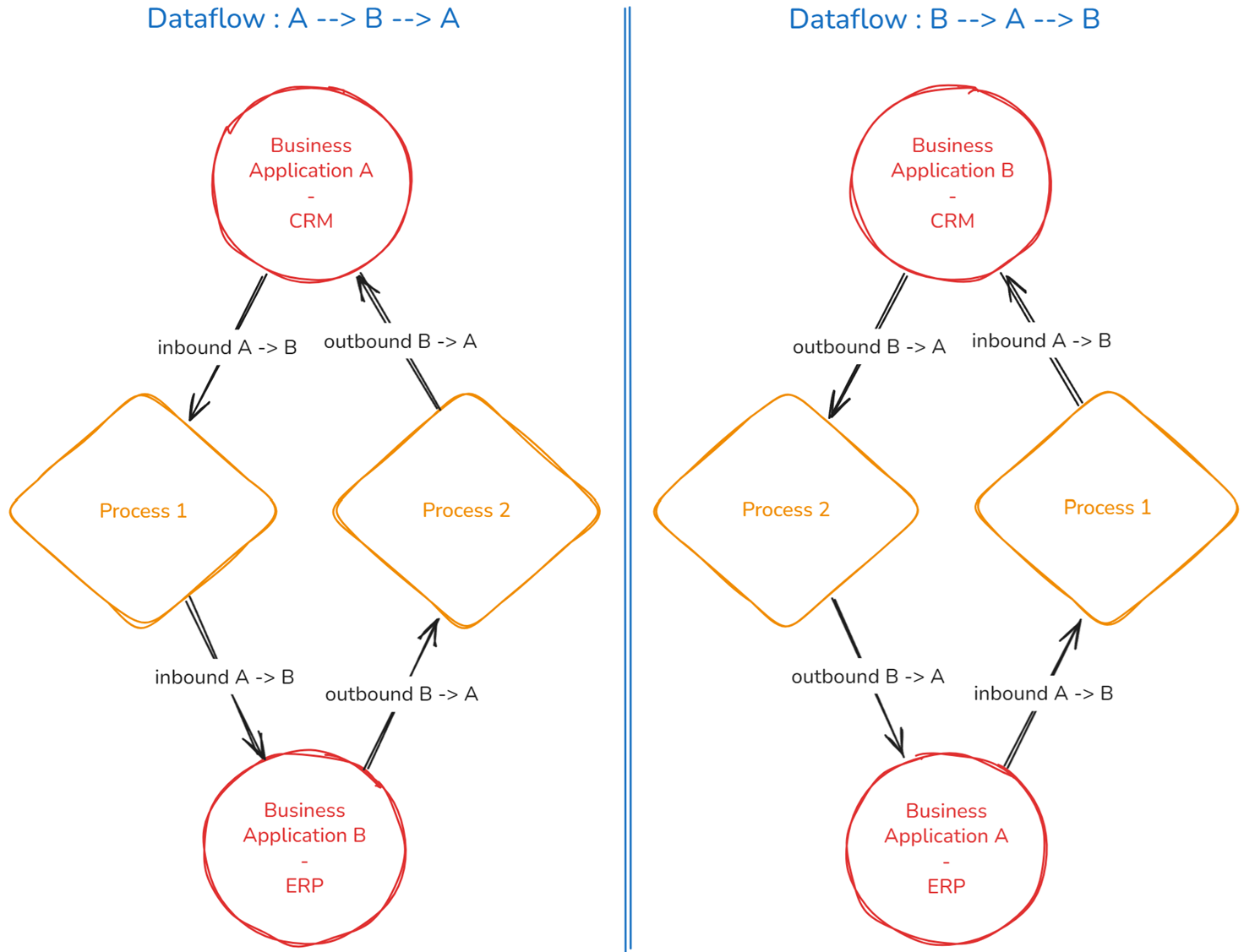
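As a rough illustration of the "messenger" idea, the following Python sketch models a hub that enriches, stores and forwards each record from a source to every subscribed target. The `DataHub` class, the `customer` topic and the sample record are all hypothetical names invented for this example; a real datahub would add persistence, error handling and monitoring.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Record = Dict[str, object]


@dataclass
class DataHub:
    """Hypothetical in-memory hub: sources publish, the hub enriches, stores and forwards."""
    targets: Dict[str, List[Callable[[Record], None]]] = field(default_factory=dict)
    enrichers: List[Callable[[Record], Record]] = field(default_factory=list)
    store: List[Record] = field(default_factory=list)

    def subscribe(self, topic: str, handler: Callable[[Record], None]) -> None:
        # A target application registers once with the hub, not with each source.
        self.targets.setdefault(topic, []).append(handler)

    def publish(self, topic: str, record: Record) -> Record:
        # Every record goes through the same steps: enrich, store, forward.
        for enrich in self.enrichers:
            record = enrich(record)
        self.store.append(record)
        for handler in self.targets.get(topic, []):
            handler(record)
        return record


# Two target applications, represented here by simple inbox lists.
crm_inbox: List[Record] = []
shop_inbox: List[Record] = []

hub = DataHub()
hub.enrichers.append(lambda r: {**r, "country": str(r["country"]).upper()})
hub.subscribe("customer", crm_inbox.append)
hub.subscribe("customer", shop_inbox.append)

# A source application (for example an ERP) publishes one record to the hub.
hub.publish("customer", {"id": 42, "country": "fr"})
```

The source never knows which targets exist, which is why adding a new application only means connecting it to the hub.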

P2P Architecture

Here is what we commonly find as a "data integration" system on the market. This example shows the business logic with an ERP and a CRM.

The ERP can be the source or the target, and so can the CRM.

This P2P (peer-to-peer, often called point-to-point in integration contexts) architecture is neither scalable nor flexible. It is hardly considered an "architecture" at all, because of the simplicity of the flow.

The benefit of this architecture is that it is easy to implement, easy to understand and quick to put into production. It is useful for small systems with few applications and few dataflows.

The drawback is that maintenance, scalability and data consistency are poor, and business rules from applications can be duplicated.

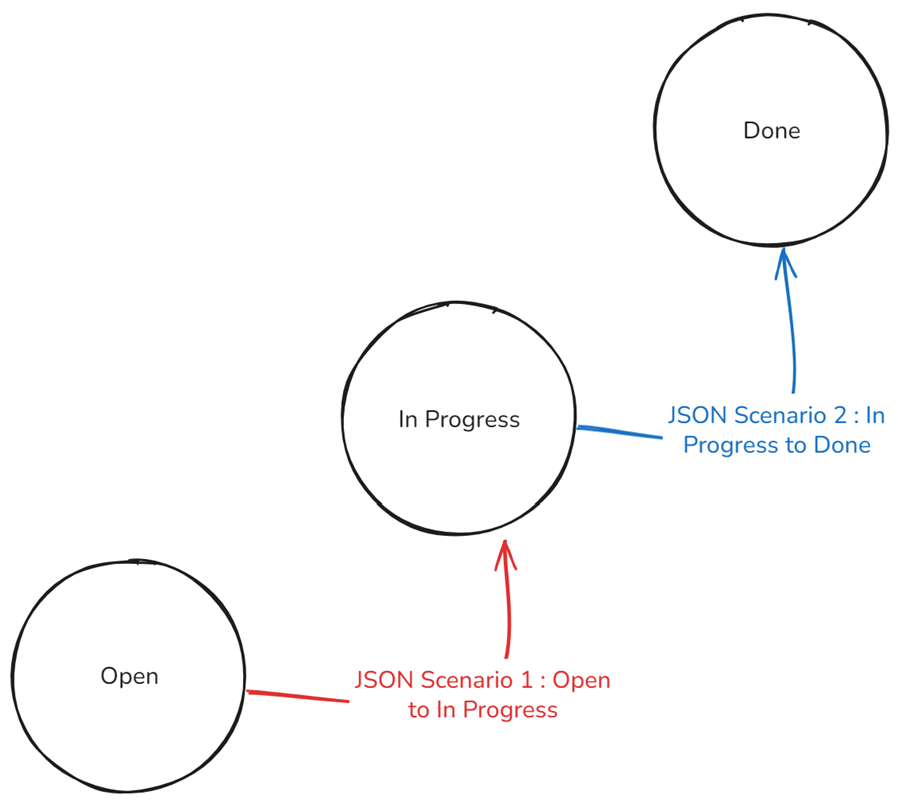
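The scalability problem can be shown with simple arithmetic. The sketch below assumes the worst case where every application exchanges data with every other one and each pair needs its own integration; the function names are illustrative.

```python
def p2p_links(applications: int) -> int:
    # One dedicated integration per pair of applications: n * (n - 1) / 2.
    return applications * (applications - 1) // 2


def hub_links(applications: int) -> int:
    # With a hub, each application only needs one connection, to the hub itself.
    return applications


for n in (2, 5, 10, 20):
    print(f"{n} applications: {p2p_links(n)} P2P integrations, {hub_links(n)} hub connections")
```

With 2 applications P2P needs a single integration, which is why it fits small systems. With 10 applications it already needs 45 pairwise integrations, against 10 connections through a hub.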

REST API Scenarios in a Database

This diagram shows a REST API scenario stored in a database to automate the process of creating JIRA issues.

A project I worked on required several steps to automate the creation of JIRA issues. Each JIRA status had to follow a specific workflow, with mandatory fields at each transition.

The solution was to define scenarios in a table, each representing a specific transition in the workflow.

Scenario 1: Transition from Open to In Progress.

Scenario 2: Transition from In Progress to Done.

When a user submits a new case and clicks "Validate" in the interface, the system checks the database to determine the current and next statuses.

With these two pieces of information, the API retrieves the JSON associated with the relevant scenario and updates the JIRA issue accordingly.

By designing the solution this way, if a workflow contains five steps, each step is handled individually by triggering the corresponding JSON. There are no chained scenarios; the workflow is defined by JIRA and maintained in the database.
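The lookup described above can be sketched in Python with SQLite. This is a minimal illustration, not the project's actual schema: the `scenario` table, its columns, the transition IDs and the field values are assumptions, and the payload shape is only loosely modeled on a JIRA issue-transition request body.

```python
import json
import sqlite3


def create_scenario_table(conn: sqlite3.Connection) -> None:
    # One row per workflow transition, keyed by (current status, next status).
    conn.execute(
        """CREATE TABLE scenario (
               current_status TEXT NOT NULL,
               next_status    TEXT NOT NULL,
               payload        TEXT NOT NULL,
               PRIMARY KEY (current_status, next_status)
           )"""
    )


def add_scenario(conn: sqlite3.Connection, current: str, nxt: str, payload: dict) -> None:
    conn.execute(
        "INSERT INTO scenario VALUES (?, ?, ?)",
        (current, nxt, json.dumps(payload)),
    )


def payload_for(conn: sqlite3.Connection, current: str, nxt: str) -> dict:
    # Retrieve the JSON for exactly one transition; scenarios are never chained.
    row = conn.execute(
        "SELECT payload FROM scenario WHERE current_status = ? AND next_status = ?",
        (current, nxt),
    ).fetchone()
    if row is None:
        raise LookupError(f"no scenario for {current!r} -> {nxt!r}")
    return json.loads(row[0])


conn = sqlite3.connect(":memory:")
create_scenario_table(conn)

# Scenario 1 and Scenario 2, with made-up transition IDs and mandatory fields.
add_scenario(conn, "Open", "In Progress",
             {"transition": {"id": "11"}, "fields": {"assignee": {"name": "jdoe"}}})
add_scenario(conn, "In Progress", "Done",
             {"transition": {"id": "21"}, "fields": {"resolution": {"name": "Fixed"}}})

# When the user clicks "Validate", the current and next statuses select the payload.
body = payload_for(conn, "Open", "In Progress")
```

Keeping the payloads in a table means a change in the JIRA workflow becomes a data change (a row to insert or update) rather than a code change.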